Introduction

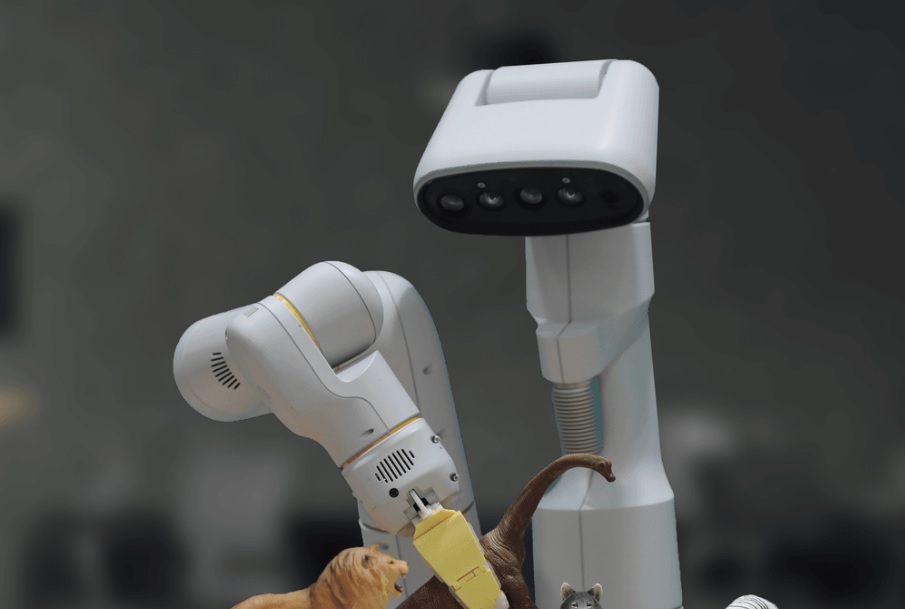

Robotic Transformer 2 (RT-2) is a groundbreaking vision-language-action (VLA) model and a significant advance in robotics. It learns from both web and robotics data and translates this knowledge into generalised instructions for controlling robots, while retaining the web-scale capabilities of high-capacity vision-language models (VLMs). This article explores how RT-2 was developed, what it can do, and what it may mean for the future of robotic control.

| Step | Topic |
| --- | --- |
| 1 | Introduction to Robotic Transformer 2 (RT-2) |
| 2 | VLMs and their Applications in Robotics |
| 3 | Vision-Language-Action (VLA) Model: RT-2 |
| 4 | Training RT-2: Combining Web and Robotics Data |
| 5 | Generalisation and Emergent Skills in RT-2 |
| 6 | Multi-Modal Learning in RT-2 |
| 7 | RT-2: Advancements in Natural Language Processing |
| 8 | How is RT-2 Adapted for Robotic Control? |
| 9 | RT-2’s Impact on the Field of Robotics |
| 10 | RT-2’s Contribution to Vision-Language Models |
| 11 | The Future of Robotics with RT-2 |

Training RT-2: Learning from Web and Robotics Data

To give robots a level of competence comparable to that of VLMs, conventional approaches would require collecting first-hand data for every object, environment, task, and situation. RT-2 sidesteps this requirement by learning from both web-scale data and robotic experience. It builds upon its predecessor, Robotic Transformer 1 (RT-1), which was trained on multi-task robot demonstrations collected over 17 months in an office kitchen environment.
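
Learning from both sources can be pictured as a training loop whose batches mix web examples with robot examples; the RT-2 paper describes this as co-fine-tuning the VLM on robot data alongside its original web data. The minimal Python sketch below assumes toy in-memory datasets and a hypothetical `robot_fraction` mixing weight. `mixed_batches`, `WEB_DATA` and `ROBOT_DATA` are illustrative names, not part of any RT-2 release.

```python
import random
from typing import Iterator, List, Tuple

Example = Tuple[str, str]  # (source, training text)

# Hypothetical toy datasets standing in for web-scale and robot data.
WEB_DATA: List[Example] = [
    ("web", "Q: what is in the image? A: a red apple"),
    ("web", "Q: what colour is the cup? A: blue"),
]
ROBOT_DATA: List[Example] = [
    ("robot", "pick up the apple"),
    ("robot", "open the top drawer"),
]


def mixed_batches(batch_size: int, robot_fraction: float, seed: int = 0) -> Iterator[List[Example]]:
    """Yield endless batches where each example comes from the robot data with
    probability `robot_fraction` and from the web data otherwise."""
    if not 0.0 <= robot_fraction <= 1.0:
        raise ValueError("robot_fraction must be in [0, 1]")
    rng = random.Random(seed)  # seeded so the mixture is reproducible
    while True:
        yield [
            rng.choice(ROBOT_DATA if rng.random() < robot_fraction else WEB_DATA)
            for _ in range(batch_size)
        ]
```

For example, `next(mixed_batches(batch_size=8, robot_fraction=0.5))` returns one batch of eight examples drawn from both pools. In this picture, keeping some web data in every batch is what lets the model hold on to its web-scale knowledge while it learns to act.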

Improving Generalisation and Understanding

RT-2 exhibits improved generalisation and semantic understanding beyond the robotic data it was exposed to. It can interpret new commands and respond to user instructions by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions. Its ability to carry out multi-stage semantic reasoning, such as deciding which object could serve as an improvised hammer, shows reasoning that goes beyond simple instruction following.

Adapting VLMs for Robotic Control

RT-2 uses the Pathways Language and Image model (PaLI-X) and the Pathways Language Model Embodied (PaLM-E) as its backbones. To enable robotic control, actions are represented as tokens in the model’s output, in the same way as language tokens. This lets RT-2 output actions as strings that can be processed by natural language tokenizers. Because robot actions are expressed as strings, VLMs can be trained on robotic data without any changes to their input and output spaces.
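
To make the "actions as strings" idea concrete, here is a toy sketch with a single shared vocabulary and a whitespace tokenizer, both hypothetical. The same `encode` function handles a language answer and an action string of integer bins, so the output space never changes. Real backbones use subword tokenizers; as we understand the RT-2 paper, PaLI-X already has individual tokens for integers, while for PaLM-E the 256 least frequently used tokens are repurposed as action tokens.

```python
from typing import Dict, List

# Hypothetical toy vocabulary: a few words plus the integers 0-255, so action
# bins reuse number tokens that already exist in the vocabulary.
WORDS = ["<pad>", "pick", "up", "the", "apple", "a", "red"]
VOCAB: Dict[str, int] = {w: i for i, w in enumerate(WORDS)}
for n in range(256):
    VOCAB[str(n)] = len(VOCAB)
ID_TO_TOKEN: Dict[int, str] = {i: t for t, i in VOCAB.items()}


def encode(text: str) -> List[int]:
    """Whitespace-tokenize `text` into ids from the shared vocabulary."""
    return [VOCAB[tok] for tok in text.split()]


def decode(ids: List[int]) -> str:
    """Map ids back to tokens and join them with spaces."""
    return " ".join(ID_TO_TOKEN[i] for i in ids)


language_target = "a red apple"
action_target = "0 128 91 241 5 101 127 217"  # illustrative bin values only

# Both targets are sequences over the same vocabulary, so the model's
# output space is unchanged when robot actions are added to training.
lang_ids = encode(language_target)
action_ids = encode(action_target)
```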

Emergent Skills and Generalisation

In a series of qualitative and quantitative experiments, RT-2 showed emergent capabilities in three categories of skills: symbol understanding, reasoning, and human recognition. Across these categories, it improved generalisation performance more than threefold over previous baselines. RT-2 outperformed prior models on tasks with previously unseen objects, backgrounds, and environments, showcasing the benefits of large-scale pre-training.

Plan-and-Act Approach with Chain-of-Thought Reasoning

RT-2 effectively combines robotic control with chain-of-thought reasoning to achieve long-horizon planning and low-level skills within a single model. By fine-tuning the model to incorporate language and actions jointly, RT-2 can perform more complex commands that require reasoning about intermediate steps needed to accomplish user instructions. This approach enables visually grounded planning, making RT-2 a promising candidate for a general-purpose physical robot capable of reasoning and problem-solving.

Advancing Robotic Control with RT-2

RT-2 demonstrates that VLMs can be turned into powerful VLA models that directly control robots. By combining VLM pre-training with robotic data, RT-2 produces markedly better robotic policies, with stronger generalisation and new emergent capabilities. The model holds great promise for building versatile robots that can perform a diverse range of tasks in the real world.

FAQ

1. What is Robotic Transformer 2 (RT-2)?

RT-2 is a novel vision-language-action (VLA) model that learns from both web and robotics data, enabling it to provide generalised instructions for robotic control.

2. How does RT-2 differ from its predecessor, RT-1?

RT-2 builds upon RT-1 by incorporating web-scale data and demonstrating improved generalisation capabilities beyond the robotic data it was exposed to.

3. How does RT-2 represent actions for robotic control?

RT-2 represents actions as tokens in its output, similar to language tokens, and describes actions as strings that can be processed by standard natural language tokenizers.

4. What are some of the emergent skills demonstrated by RT-2?

RT-2 exhibits emergent skills in symbol understanding, reasoning, and human recognition, and improves generalisation performance more than threefold over previous baselines.

5. How does RT-2 plan long-horizon sequences of actions?

RT-2 combines robotic control with chain-of-thought reasoning, allowing it to plan long-horizon skill sequences and predict robot actions more effectively.

6. What backbone models are used in RT-2?

RT-2 builds upon the Pathways Language and Image model (PaLI-X) and the Pathways Language Model Embodied (PaLM-E), which serve as its backbones.

7. What are some real-world applications of RT-2?

RT-2’s capabilities open doors to various real-world applications, including robotic problem-solving, reasoning, and executing complex user instructions.

Step 8: How is RT-2 Adapted for Robotic Control?

Robotic Transformer 2 (RT-2) represents a remarkable advancement in robotics by harnessing vision-language models (VLMs) and adapting them for robotic control. This section explains how RT-2 is adapted to control robots, so that they can execute actions based on visual and language inputs.

1. Incorporating VLMs for Robotic Control

RT-2 is built upon VLMs that take one or more images as input and produce a sequence of tokens representing natural language text. To adapt these models for robotic control, actions are represented as tokens in the model’s output, just like language tokens. This representation allows RT-2 to generate action strings that can be processed by standard natural language tokenizers.
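
The control loop implied by this design can be sketched as follows: at each step the model sees the current camera image plus the instruction, generates text, and that text is parsed into action tokens. This is a minimal Python sketch under stated assumptions: `stub_vlm` stands in for a real VLM, the question-style prompt is illustrative, and the 8-token layout (a terminate flag followed by 7 action bins) is assumed rather than taken from this article.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence

Image = Sequence[Sequence[int]]  # toy stand-in for a camera frame


@dataclass
class Observation:
    image: Image
    instruction: str


# Hypothetical interface: any callable mapping (image, prompt) -> generated text.
VLM = Callable[[Image, str], str]


def stub_vlm(image: Image, prompt: str) -> str:
    """Placeholder for a real VLM backbone; always emits the same action string."""
    return "0 128 128 128 128 128 128 255"


def control_step(model: VLM, obs: Observation) -> List[int]:
    """Ask the model for an action given the current frame, then parse the
    generated text back into integer action tokens."""
    prompt = f"Q: what action should the robot take to {obs.instruction}? A:"
    tokens = [int(t) for t in model(obs.image, prompt).split()]
    if len(tokens) != 8:  # assumed layout: terminate flag + 7 action bins
        raise ValueError(f"expected 8 action tokens, got {len(tokens)}")
    return tokens


def run_episode(
    model: VLM,
    observe: Callable[[], Observation],
    execute: Callable[[List[int]], None] = lambda bins: None,
    max_steps: int = 10,
) -> int:
    """Closed loop: observe, predict, act; stop when the terminate flag is 1.
    Returns the number of actions executed."""
    for step in range(max_steps):
        tokens = control_step(model, observe())
        if tokens[0] == 1:
            return step
        execute(tokens[1:])  # robot-specific de-tokenisation and motion, not shown
    return max_steps
```

Passing the model and the robot interface in as callables keeps the loop independent of any particular backbone or hardware.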

2. Representation of Robot Actions

The representation of robot actions is crucial for effective robotic control. RT-2 uses discretised robot actions, similar to its predecessor RT-1. Converting this representation into a string format makes it possible to train VLMs on robotic data without changing their input and output spaces.
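
The discretisation step can be written down directly. For a continuous value `x` in the range `[low, high]` and `N` bins, one natural choice is `b = round((x - low) / (high - low) * (N - 1))`, which reconstructs `x` to within half a bin spacing, `(high - low) / (2 * (N - 1))`. The sketch below assumes `N = 256` (the bin count we understand the RT-2 paper to use), a layout of a terminate flag followed by seven continuous dimensions (three position deltas, three rotation deltas, gripper extension), and hypothetical value ranges in `RANGES`.

```python
from typing import List, Sequence, Tuple

NUM_BINS = 256  # assumed bin count per continuous dimension

# Hypothetical (low, high) ranges: 3 position deltas, 3 rotation deltas,
# and gripper extension.
RANGES: List[Tuple[float, float]] = [(-0.1, 0.1)] * 3 + [(-0.5, 0.5)] * 3 + [(0.0, 1.0)]


def to_bin(x: float, low: float, high: float, num_bins: int = NUM_BINS) -> int:
    """Clip x to [low, high] and map it to the nearest of num_bins evenly spaced levels."""
    x = min(max(x, low), high)
    return round((x - low) / (high - low) * (num_bins - 1))


def from_bin(b: int, low: float, high: float, num_bins: int = NUM_BINS) -> float:
    """Return the continuous value represented by bin index b."""
    return low + b * (high - low) / (num_bins - 1)


def action_to_string(terminate: bool, continuous: Sequence[float]) -> str:
    """Encode a terminate flag plus 7 continuous values as 8 space-separated integers."""
    if len(continuous) != len(RANGES):
        raise ValueError(f"expected {len(RANGES)} continuous values")
    bins = [to_bin(x, lo, hi) for x, (lo, hi) in zip(continuous, RANGES)]
    return " ".join([str(int(terminate))] + [str(b) for b in bins])


def string_to_action(s: str) -> Tuple[bool, List[float]]:
    """Decode an action string back to (terminate, continuous values)."""
    first, *rest = (int(t) for t in s.split())
    return bool(first), [from_bin(b, lo, hi) for b, (lo, hi) in zip(rest, RANGES)]
```

Clipping before binning means an out-of-range command saturates at the edge bin instead of producing an invalid token.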

3. Fine-tuning and Augmentation

To strengthen RT-2’s ability to use language and actions jointly, a variant of the model is fine-tuned for just a few hundred gradient steps. The training data is also augmented with a “Plan” step that describes, in natural language, the purpose of the action the robot is about to take; this is followed by “Action” and the corresponding action tokens.
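
The Plan/Action augmentation amounts to a change in how training targets are written. A minimal sketch, assuming a `Plan: ... Action: ...` text layout modelled on the description above; the function name `format_plan_action_example` and the example bins are hypothetical.

```python
from typing import Dict, Sequence


def format_plan_action_example(instruction: str, plan: str, action_tokens: Sequence[int]) -> Dict[str, str]:
    """Build one (prompt, target) training pair in an assumed
    'Plan: <text>. Action: <integers>' layout."""
    if "Action:" in plan:
        # Keep the marker unambiguous so the target can be split again later.
        raise ValueError("plan text must not contain the 'Action:' marker")
    prompt = f"Instruction: {instruction}"
    target = f"Plan: {plan}. Action: " + " ".join(str(t) for t in action_tokens)
    return {"prompt": prompt, "target": target}


example = format_plan_action_example(
    instruction="I need to hammer a nail",
    plan="pick up the rock",
    action_tokens=[0, 128, 130, 120, 127, 128, 126, 255],  # illustrative bins
)
```

Because the plan and the action sit in one target string, the same next-token objective trains both; in this picture no separate planning module is needed.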

4. Planning with Chain-of-Thought Reasoning

Chain-of-thought reasoning is a key aspect of RT-2’s approach. This technique allows the model to combine robotic control with planning long-horizon skill sequences. By augmenting the data with additional steps for planning, RT-2 can reason about intermediate actions needed to accomplish user instructions more effectively.
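
At inference time, the generated text has to be split back into the natural-language plan and the action tokens. The sketch below assumes a hypothetical `Plan: <text>. Action: <integers>` layout for the model's output; `parse_plan_and_action` is an illustrative helper, not an RT-2 API.

```python
import re
from typing import List, Tuple

# Assumed output layout: "Plan: <free text>[.] Action: <space-separated integers>"
_PLAN_ACTION = re.compile(r"Plan:\s*(?P<plan>.*?)\.?\s*Action:\s*(?P<action>[\d\s]+)$")


def parse_plan_and_action(generated: str) -> Tuple[str, List[int]]:
    """Split generated text into the natural-language plan and integer action tokens."""
    match = _PLAN_ACTION.search(generated.strip())
    if match is None:
        raise ValueError(f"could not parse model output: {generated!r}")
    plan = match.group("plan").strip()
    action = [int(t) for t in match.group("action").split()]
    return plan, action
```

For example, `parse_plan_and_action("Plan: pick up the rock. Action: 0 128 130 120 127 128 126 255")` separates the plan text, which can be logged for inspection, from the integer tokens that go on to the controller.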

5. Visually Grounded Planning

Thanks to its VLM backbone, RT-2 can plan from both image and text commands. This capability enables visually grounded planning, where the robot uses visual cues along with language instructions to determine appropriate actions. This sets RT-2 apart from traditional plan-and-act approaches that rely solely on language without visual perception.

6. Benefits of Chain-of-Thought Reasoning

The incorporation of chain-of-thought reasoning allows RT-2 to perform more complex commands that require reasoning about intermediate steps. This lets the model tackle harder, multi-step tasks instead of mapping each command directly to a single action.

7. Advantages Over Traditional Robotic Control

RT-2’s approach to adapting VLMs for robotic control offers several advantages. It leverages the power of pre-training on web-scale data and combines it with specific robotic experiences, leading to superior generalisation performance and emergent capabilities.

8. A Versatile Model for Real-World Scenarios

With its ability to reason, problem-solve, and interpret information, RT-2 proves to be a versatile model for real-world scenarios. It can perform a wide range of tasks, making it a valuable asset for robotics applications.

9. Expanding the Potential of Robotics

RT-2 represents a significant step forward in the evolution of robotics. By expanding the potential of vision-language models and adapting them for robotic control, RT-2 opens up new possibilities for intelligent and versatile robotic systems.

10. Future Directions for RT-2

As research and development continue, RT-2 holds promise for further advancements in robotic control. Future directions may involve exploring more complex reasoning capabilities, improving generalisation across diverse environments, and refining the model’s performance on real-world tasks.

Step 9: RT-2’s Impact on the Field of Robotics

Robotic Transformer 2 (RT-2) has made a significant impact on the field of robotics since its introduction. This section looks at the ways in which RT-2 has influenced and transformed robotic research and applications.

1. Revolutionary Vision-Language-Action (VLA) Models

RT-2 is a pioneering vision-language-action model that combines the power of VLMs with robotic control. This revolutionary approach has sparked a new wave of research, pushing the boundaries of what robots can achieve by incorporating both visual perception and language understanding.

2. Enabling Generalised Robotic Control

One of RT-2’s key contributions is its ability to provide generalised instructions for robotic control. By learning from both web-scale data and specific robotic experiences, RT-2 can execute actions in diverse and previously unseen scenarios, showcasing its remarkable generalisation capabilities.

3. Advancements in Cognitive Robotics

RT-2’s cognitive abilities, including reasoning, problem-solving, and semantic understanding, have propelled the field of cognitive robotics. The model’s capability to interpret natural language instructions and translate them into precise actions demonstrates the potential for more sophisticated human-robot interactions.

4. Applications in Real-World Scenarios

The practical applications of RT-2 in real-world scenarios are far-reaching. From household chores to industrial automation, RT-2’s adaptability and versatility make it an invaluable tool for enhancing productivity and efficiency in various domains.

5. Bridging the Gap Between Vision and Language

RT-2 serves as a bridge between vision and language, enabling robots to process visual information and language inputs simultaneously. This integration unlocks new possibilities for robots to understand complex instructions and perform tasks with higher-level reasoning.

6. Promoting Research in Robotics and AI

The development of RT-2 has inspired a surge in research and collaboration among roboticists, AI researchers, and natural language processing experts. The cross-disciplinary nature of RT-2’s design has fostered innovation and accelerated progress in multiple fields.

7. Paving the Way for Human-Robot Collaboration

As robots become more capable and cognitively advanced, the potential for human-robot collaboration increases significantly. RT-2’s language understanding and generalisation capabilities are essential for enabling seamless teamwork between humans and robots.

8. Building Trust in Robotic Systems

Robotic systems powered by RT-2 could offer greater reliability and safety, because they can reason about and understand human instructions. Such progress would be crucial for building trust in robots and integrating them smoothly into human-centric environments.

9. Impact on Autonomous Systems

RT-2’s relevance extends beyond traditional robotics into the realm of autonomous systems. The ability to learn from data and make decisions based on visual and language cues is also central to self-driving vehicles and other autonomous technologies.

10. Shaping the Future of Robotics

The revolutionary capabilities of RT-2 are shaping the future of robotics. As the model continues to evolve and advance, it holds the promise of unlocking even greater potential for intelligent, autonomous, and socially aware robotic systems.

Step 10: RT-2’s Contribution to Vision-Language Models

Robotic Transformer 2 (RT-2) represents a significant milestone for vision-language models (VLMs). This section explores how RT-2’s development has contributed to the progress and understanding of VLMs and their application in robotics.

1. Integration of Vision and Language

RT-2’s success lies in its seamless integration of vision and language. By learning from both web-scale data and robotic experiences, RT-2 effectively connects visual perception with language understanding, enabling robots to process and respond to complex instructions.

2. VLMs for Robotic Control

RT-2’s adaptation of VLMs for robotic control is a groundbreaking achievement. The model’s ability to represent actions as tokens in its output allows it to generate action strings that can be processed by natural language tokenizers, facilitating the translation of language commands into robotic actions.

3. Advancements in Natural Language Processing

The development of RT-2 has pushed the boundaries of natural language processing (NLP) within the context of robotics. The model’s capability to comprehend and reason about natural language instructions demonstrates the potential for sophisticated human-robot communication.

4. Multi-Modal Learning

RT-2 learns from images, natural language, and robot actions, drawing on both web-scale data and robotics data, which makes it a clear example of multi-modal learning. By combining these modalities and sources, the model gains a broader understanding of the world, which improves its decision-making and problem-solving.

5. Generalisation Across Environments

One of RT-2’s significant contributions is its improved generalisation capabilities across diverse environments. By training on both web-scale data and robotic data, RT-2 can execute actions in scenarios it has never encountered before, showcasing its ability to adapt to novel environments.

6. Cognitive Skills in VLMs

The emergence of cognitive skills in VLMs, as demonstrated by RT-2, is a pivotal development. The model’s ability to reason and perform complex actions based on visual-semantic concepts paves the way for more sophisticated AI systems.

7. Robotic Knowledge Transfer

RT-2’s successful knowledge transfer from web-based data to robotic control is an essential step in advancing the field of robotics. This capability enables robots to learn from a vast amount of web-scale information, facilitating their understanding of new scenarios.

8. Vision-Language Interaction

RT-2’s vision-language interaction sets the stage for future advancements in AI systems that seamlessly combine visual perception with language understanding. The model’s ability to plan from both image and text commands opens up new possibilities for AI applications.

9. Enhancing Robotic Perception

By leveraging VLMs for robotic control, RT-2 has significantly enhanced robotic perception. The model’s ability to process visual and language cues simultaneously improves robots’ understanding of the world and enables more effective decision-making.

10. Impact on AI Research

RT-2’s contributions to VLMs have had a broader impact on AI research. The model’s innovative approach and success in robotic control have inspired researchers to explore new possibilities for incorporating vision and language in various AI applications.

Step 11: The Future of Robotics with RT-2

Robotic Transformer 2 (RT-2) has opened new frontiers in robotics and AI. This section looks at the future implications and potential advancements that RT-2 brings to the field of robotics.

1. Advancing Human-Robot Collaboration

As RT-2 continues to evolve, it holds the potential to revolutionise human-robot collaboration. The model’s language understanding, generalisation capabilities, and cognitive skills enable more seamless and natural interactions between humans and robots.

2. Personalised Robotic Assistants

The development of RT-2 opens up the possibility of personalised robotic assistants. These assistants can comprehend and respond to individual users’ instructions, making them valuable assets in various settings, from homes to workplaces.

3. Ethical Considerations

As robotic systems become more advanced and integrated into society, ethical considerations become paramount. RT-2’s ability to reason and interpret language raises important questions about responsibility, safety, and accountability in the realm of autonomous systems.

4. Cross-Domain Applications

RT-2’s versatility makes it applicable across diverse domains, from healthcare to manufacturing and beyond. As the model’s capabilities are refined and expanded, it may find applications in fields beyond traditional robotics.

5. Augmenting Human Capabilities

RT-2’s potential to assist humans in complex tasks holds promise for augmenting human capabilities. By working in tandem with humans, RT-2 can amplify productivity and efficiency in various industries.

6. Real-World Robotic Agents

As RT-2 advances, the prospect of real-world robotic agents becomes increasingly feasible. These agents can navigate and interact with the physical world, paving the way for autonomous vehicles, delivery drones, and robotic assistants.

7. Continued Collaboration and Research

The success of RT-2 underscores the importance of collaboration between AI researchers and roboticists. As the field of robotics progresses, ongoing collaboration will be critical in developing ever more sophisticated and capable robotic systems.

8. Enhanced Understanding of AI Systems

The development of RT-2 contributes to a deeper understanding of AI systems, particularly in their ability to perceive and comprehend the world. This insight is instrumental in shaping the future of AI research and development.

9. Addressing Societal Challenges

As RT-2 and similar AI advancements continue to unfold, they have the potential to address societal challenges. From aiding in disaster response to supporting healthcare services, AI-powered robotics can make a significant positive impact.

10. Shaping the Human-Robot Future

Ultimately, the future of robotics with RT-2 lies in shaping a harmonious relationship between humans and robots. As AI systems become more integrated into daily life, the development of responsible, safe, and empathetic robotics is of paramount importance.
